#50=,9:0;@6-,5;<*2@#50=,9:0;@6-,5;<*2@

#56>3,+.,#56>3,+.,

"/,:,:(5+0::,9;(;065:.90*<3;<9(3

*65640*:

#56>3,+.,

" !!&!"" " !!&!""

"!!"!!

)+,3(A0A(>(50

#50=,9:0;@6-,5;<*2@

()+,3(>(50<2@,+<

0.0;(3)1,*;+,5;0C,9/;;7:+6069.,;+

0./;*30*2;667,5(-,,+)(*2-69405(5,>;();63,;<:256>/6>;/0:+6*<4,5;),5,C;:@6< 0./;*30*2;667,5(-,,+)(*2-69405(5,>;();63,;<:256>/6>;/0:+6*<4,5;),5,C;:@6<

,*644,5+,+0;(;065 ,*644,5+,+0;(;065

(>(50)+,3(A0A" !!&!"" "!

!

"/,:,:(5+0::,9;(;065:.90*<3;<9(3*65640*:

/;;7:<256>3,+.,<2@,+<(.,*65',;+:

"/0:6*;69(30::,9;(;0650:)96<./;;6@6<-69-9,,(5+67,5(**,::)@;/,#56>3,+.,(;#56>3,+.,;/(:

),,5(**,7;,+-6905*3<:06505"/,:,:(5+0::,9;(;065:.90*<3;<9(3*65640*:)@(5(<;/690A,+(+4050:;9(;696-

#56>3,+.,69469,05-694(;06573,(:,*65;(*;#56>3,+.,3:=<2@,+<

!"#" "!"#" "

9,79,:,5;;/(;4@;/,:0:69+0::,9;(;065(5+():;9(*;(9,4@690.05(3>692967,9(;;90)<;065

/(:),,5.0=,5;6(336<;:0+,:6<9*,:<5+,9:;(5+;/(;(4:63,3@9,:765:0)3,-696);(0505.

(5@5,,+,+*67@90./;7,940::065:/(=,6);(05,+5,,+,+>90;;,57,940::065:;(;,4,5;:

-964;/,6>5,9:6-,(*/;/09+7(9;@*67@90./;,+4(;;,9;6),05*3<+,+054@>692(336>05.

,3,*;9650*+0:;90)<;0650-:<*/<:,0:56;7,940;;,+)@;/,-(09<:,+6*;905,>/0*/>033),

:<)40;;,+;6#56>3,+.,(:++0;065(303,

/,9,)@.9(5;;6"/,#50=,9:0;@6-,5;<*2@(5+0;:(.,5;:;/,099,=6*()3,565,?*3<:0=,(5+

96@(3;@-9,,30*,5:,;6(9*/0=,(5+4(2,(**,::0)3,4@>69205>/63,69057(9;05(33-694:6-

4,+0(56>69/,9,(-;,9256>5(.9,,;/(;;/,+6*<4,5;4,5;065,+()6=,4(@),4(+,

(=(03()3,044,+0(;,3@-69>693+>0+,(**,::<53,::(5,4)(9.6(7730,:

9,;(05(336;/,96>5,9:/0790./;:;6;/,*67@90./;6-4@>692(3:69,;(05;/,90./;;6<:,05

-<;<9,>692::<*/(:(9;0*3,:69)662:(33697(9;6-4@>692<5+,9:;(5+;/(;(4-9,,;6

9,.0:;,9;/,*67@90./;;64@>692

$% $" $% $"

"/,+6*<4,5;4,5;065,+()6=,/(:),,59,=0,>,+(5+(**,7;,+)@;/,:;<+,5;B:(+=0:6965

),/(3-6-;/,(+=0:69@*6440;;,,(5+)@;/,09,*;696-9(+<(;,!;<+0,:!65),/(3-6-

;/,796.9(4>,=,90-@;/(;;/0:0:;/,C5(3(7796=,+=,9:0656-;/,:;<+,5;B:;/,:0:05*3<+05.(33

*/(5.,:9,8<09,+)@;/,(+=0:69@*6440;;,,"/,<5+,9:0.5,+(.9,,;6()0+,)@;/,:;(;,4,5;:

()6=,

)+,3(A0A(>(50!;<+,5;

90*/(,3 ,,+(16996-,::69

9(93 0336509,*;696-9(+<(;,!;<+0,:

THREE ESSAYS ON THE APPLICATION OF MACHINE LEARNING METHODS

IN ECONOMICS

DISSERTATION

A dissertation submitted in partial fulfillment of the

requirements for the degree of Doctor of Philosophy in the

College of Agriculture, Food and Environment

at the University of Kentucky

By

Abdelaziz Lawani

Lexington, Kentucky

Co-Directors: Dr. Michael Reed, Professor of

International Trade and Agricultural Marketing

and Dr. Yuqing Zheng, Associate Professor of

Food Marketing and Policy Analysis

Lexington, Kentucky

2018

Copyright © Abdelaziz Lawani 2018

ABSTRACT OF DISSERTATION

THREE ESSAYS ON THE APPLICATION OF MACHINE LEARNING METHODS

IN ECONOMICS

Over the last decades, economics as a field has experienced a profound transformation

from theoretical work toward an emphasis on empirical research (Hamermesh, 2013).

One common constraint of empirical studies is the access to data, the quality of the data

and the time span it covers. In general, applied studies rely on surveys, administrative or

private sector data. These data are limited and rarely have universal or near universal

population coverage. The growth of the internet has made available a vast amount of

digital information. These big digital data are generated through social networks, sensors,

and online platforms. These data account for an increasing part of the economic activity

yet for economists, the availability of these big data also raises many new challenges re-

lated to the techniques needed to collect, manage, and derive knowledge from them.

The data are in general unstructured, complex, voluminous and the traditional software

used for economic research are not always effective in dealing with these types of data.

Machine learning is a branch of computer science that uses statistics to deal with big data.

The objective of this dissertation is to reconcile machine learning and economics. It uses

threes case studies to demonstrate how data freely available online can be harvested and

used in economics. The dissertation uses web scraping to collect large volume of unstruc-

tured data online. It uses machine learning methods to derive information from the un-

structured data and show how this information can be used to answer economic questions

or address econometric issues.

The first essay shows how machine learning can be used to derive sentiments from re-

views and using the sentiments as a measure for quality it examines an old economic the-

ory: Price competition in oligopolistic markets. The essay confirms the economic theory

that agents compete for price. It also confirms that the quality measure derived from sen-

timent analysis of the reviews is a valid proxy for quality and influences price. The sec-

ond essay uses a random forest algorithm to show that reviews can be harnessed to pre-

dict consumers’ preferences. The third essay shows how properties description can be

used to address an old but still actual problem in hedonic pricing models: the Omitted

Variable Bias. Using the Least Absolute Shrinkage and Selection Operator (LASSO) it

shows that pricing errors in hedonic models can be reduced by including the description

of the properties in the models.

KEYWORDS: Machine Learning, Hedonic Price Model, Sentiment Analysis, Random

Forest, Omitted Variable Bias, LASSO

Abdelaziz Lawani

July 22

nd

, 2018

THREE ESSAYS ON THE APPLICATION OF MACHINE LEARNING METHODS

IN ECONOMICS

by

Abdelaziz Lawani

Prof. Michael Reed

Co-Director of Dissertation

Dr. Yuqing Zheng

Co-Director of Dissertation

Prof. Carl R. Dillon

Director of Graduate Studies

July 22

nd

, 2018

Anna-Liisa, T’del Karl, Marie-Madeleine, and Nathalia Nakaambo, this work is dedicat-

ed to you. When I embarked in this journey a few years ago, little did I know that it was

going to be not only intellectual but also emotional and spiritual. We learned, overcame

challenges, and grew together. You are the real Ph.Ds.!

iii

ACKNOWLEDGMENTS

The completion of this dissertation would not have been possible without the guidance

and advice of my advisors Prof. Reed Michael and Dr. Yuqing Zheng. I remember their

reactions when I first presented my idea to them a few years ago. It was a mix of curiosi-

ty, excitement, and encouragement. They offered me the freedom necessary to unleash

my intellectual curiosity, dig into challenging questions, gain the knowledge and skills

from other fields, and develop the confidence needed to address the questions subject of

this dissertation. Their suggestions and insightful comments have been crucial in turning

a raw idea into a polished topic.

I would extend my sincere appreciation to Prof. Leigh Maynard who arduously supported

me in expanding my passion for research for development and entrepreneurship. He

helped me look beyond the academia and use my knowledge and skills to address some

of the world most pressing challenges.

I also want to thank the staff, students, and faculty members of the Department of Agri-

cultural Economics at the University of Kentucky. It was comforting to see the familial

atmosphere in the department and their efforts to give the students the best education pos-

sible. Their support played a significant role in my success. I remember the words of en-

couragement of Rita, and Karen and the extraordinary efforts of Kristen helping me get

the materials needed for my works.

Finally, this dissertation would not have been possible without the support from my fami-

ly. My wife Anna-Liisa Ihuhwa was my rock. She provided me with the mental stamina

during the whole process. The process will also see the birth of our son T’del Karl. He

brought us joy and happiness, and his first steps are the inspiration behind this disserta-

tion. I saw him fall and crawl so many times, yet he never fails to always try one more

time. This mental image was my inspiration. The process would not be complete without

my family members in Benin and Namibia. I never felt alone during the journey because

you were always there.

TABLE OF CONTENTS

Acknowledgments.............................................................................................................. iii

Table of Contents ............................................................................................................... iv

List of Tables .................................................................................................................... vii

List of figures ................................................................................................................... viii

Chapter 1 : General introduction......................................................................................... 1

Chapter 2 : Impact of reviews on price: Evidence from sentiment analysis of Airbnb

reviews in Boston ................................................................................................................ 4

2.1. Abstract .................................................................................................................... 4

2.2. Introduction .............................................................................................................. 4

2.3. Literature review ...................................................................................................... 7

2.4. Conceptual framework ........................................................................................... 10

2.5. Data and Methods ................................................................................................... 15

2.5.1. Data ................................................................................................................. 15

2.5.2. Derivation of quality scores with sentiment analysis of the reviews .............. 18

2.6. Econometric results and discussion ........................................................................ 25

2.7. Sensitivity analysis ................................................................................................. 31

2.8. Conclusion .............................................................................................................. 38

Chapter 3 Chapter 3. Text-based predictions of beer preferences by mining online

reviews .............................................................................................................................. 40

3.1. Abstract: ................................................................................................................. 40

3.2. Introduction ............................................................................................................ 40

3.3. Literature review .................................................................................................... 43

3.4. Methodology .......................................................................................................... 47

3.4.1. Feature extraction ............................................................................................ 48

3.4.2. Feature selection ............................................................................................. 49

3.4.3. Predictive model: the random forest ............................................................... 51

v

3.5. Results and discussions .......................................................................................... 57

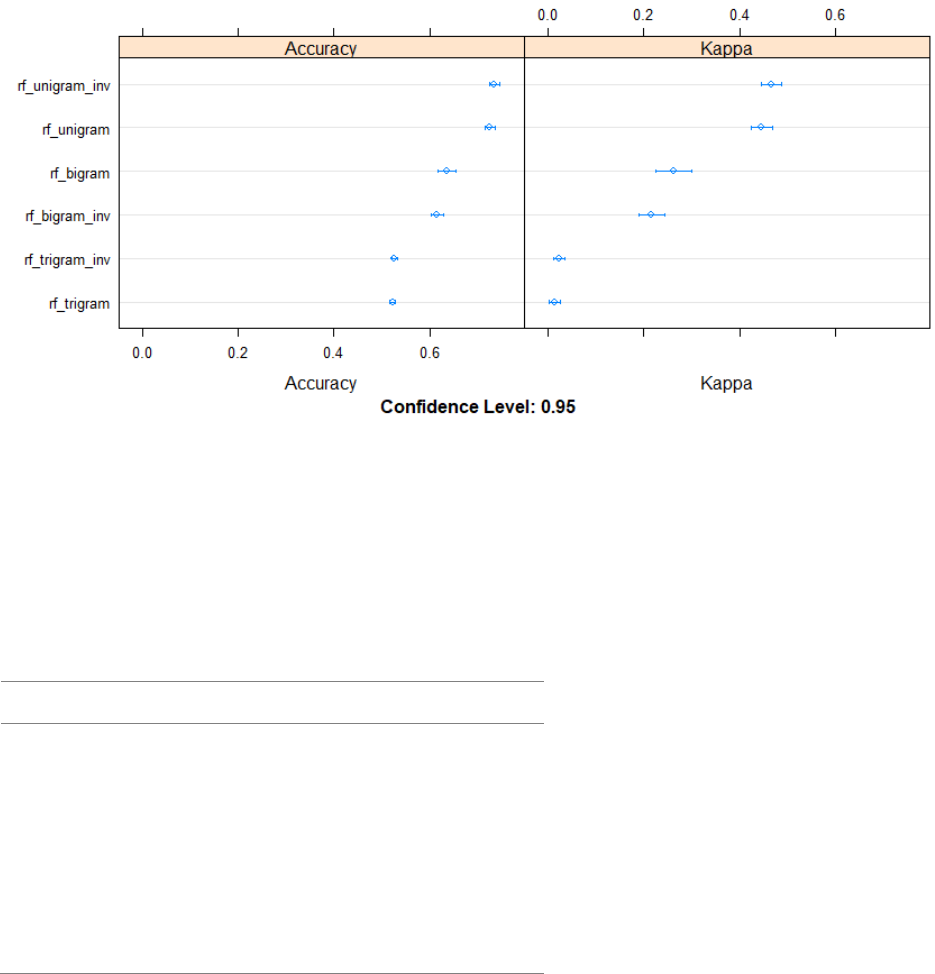

3.5.1. N-grams representation ................................................................................... 57

3.5.2. Term frequency vs inverse document frequency ............................................ 59

3.5.3. Performance analysis of the random forest classifier for the unigram-inv

model......................................................................................................................... 60

3.5.4. Effect of number of trees on the model accuracy ........................................... 62

3.5.5. Identification of the most important features in the reviews .......................... 63

3.6. Conclusion .............................................................................................................. 66

Chapter 4 : Textual analysis and omitted variable bias in hedonic price models applied to

short-term apartment rental market. .................................................................................. 68

4.1. Abstract .................................................................................................................. 68

4.2. Introduction ............................................................................................................ 68

4.3. Data and estimation procedure ............................................................................... 71

4.3.1. Data ................................................................................................................. 71

4.3.2. Estimation procedure ...................................................................................... 74

4.3.3. Unigram representation of the description of rental rooms ............................ 74

4.3.4. Penalized regression: The Least Absolute Shrinkage and Selection Operator

(LASSO) ................................................................................................................... 77

4.4. Results and discussion ............................................................................................ 79

4.4.1. Comparison of the regression models ............................................................. 79

4.4.2. LASSO estimates of the BOW-time-location fixed effects hedonic pricing

model......................................................................................................................... 82

4.4.3. Pricing value of the features. .......................................................................... 84

4.5. Conclusion .............................................................................................................. 87

Chapter 5 : General conclusion ......................................................................................... 89

Glossary ............................................................................................................................ 91

References ......................................................................................................................... 94

vi

Vita .................................................................................................................................. 106

vii

LIST OF TABLES

Table 2.1: Description and summary statistics of the variables ........................................ 17

Table 2.2: Sample of reviews and their score ................................................................... 20

Table 2.3: Moran I test ...................................................................................................... 22

Table 2.4: OLS regression diagnostic test for spatial dependence ................................... 22

Table 2.5: Estimates of the spatial lag regressions with 1 mile as weight matrix ............ 26

Table 2.6: Direct, indirect and total effects of the impact of the regressors on room price

........................................................................................................................................... 27

Table 2.7: Decomposition of the impact of review score for flexible, moderate, strict and

super strict cancellation policies ....................................................................................... 30

Table 2.8: Likelihood ratio tests for the statistical significance of price lag and quality

variables in the linear mixed effects models ..................................................................... 32

Table 2.9: Estimates of the spatial lag regression with 3 and 5 miles as weight matrix ... 34

Table 2.10: Impact of alternative measures of quality on price ........................................ 36

Table 2.11: Decomposition estimates of the direct and indirect effects of quality variables

on rooms' prices ................................................................................................................ 36

Table 3.1: Number of features selected with the Boruta algorithm .................................. 51

Table 3.2: Models accuracy and Kappa statistic ............................................................... 58

Table 3.3: Confusion matrix of the unigram-inverse random forest model ..................... 60

Table 4.1: Description and summary statistics for Airbnb data in San Francisco ............ 72

Table 4.2: Sample of description of the rental units on Airbnb in San Francisco ............ 73

viii

LIST OF FIGURES

Figure 2.1: Frequency of the languages used to write the reviews on Airbnb in Boston . 21

Figure 2.2: Boxplot of price by cancellation policy ......................................................... 29

Figure 3.1: Performance comparison of the unigram, bigram, trigram models and their

inverse ............................................................................................................................... 58

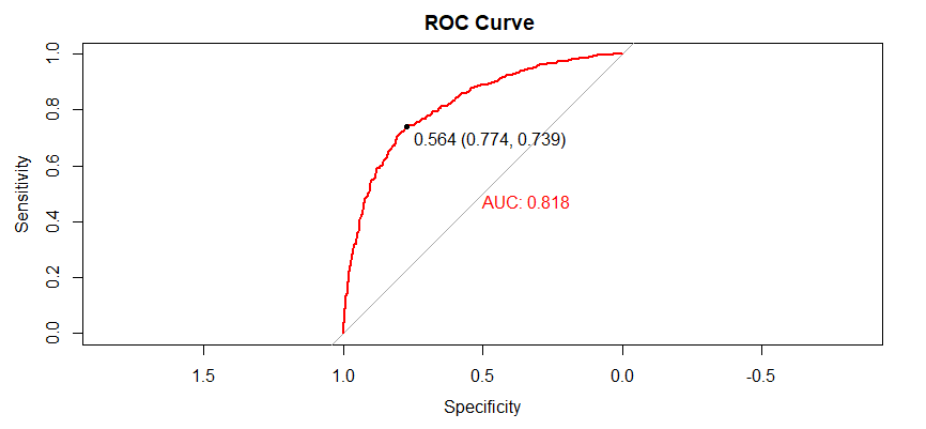

Figure 3.2: Receiver Operator Curve (ROC) and Area Under the Curve (AUC) for the

unigram-inverse random forest model .............................................................................. 62

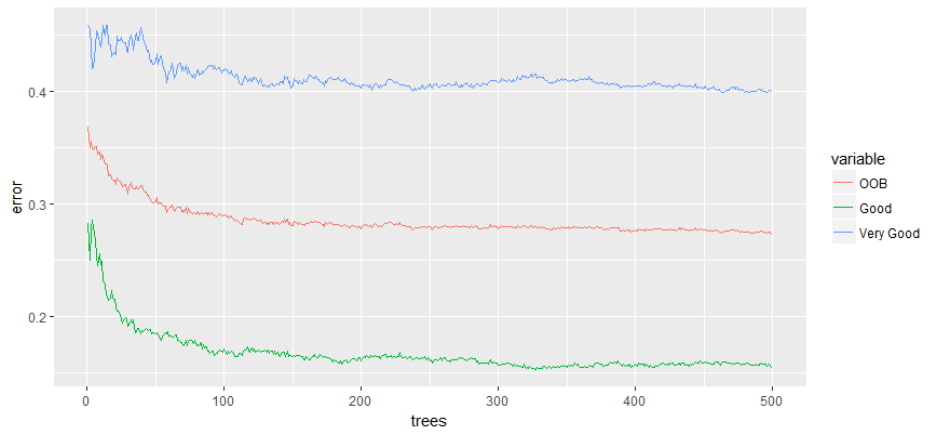

Figure 3.3: Effect of the number of trees on Out of Bag, Good, and "Very Good"

categories error rate estimates ........................................................................................... 63

Figure 3.4: Importance of the features in the unigram inverse predictive random forest

model................................................................................................................................. 65

Figure 4.1: Word cloud representation of the rental unit description on Airbnb in San

Francisco ........................................................................................................................... 76

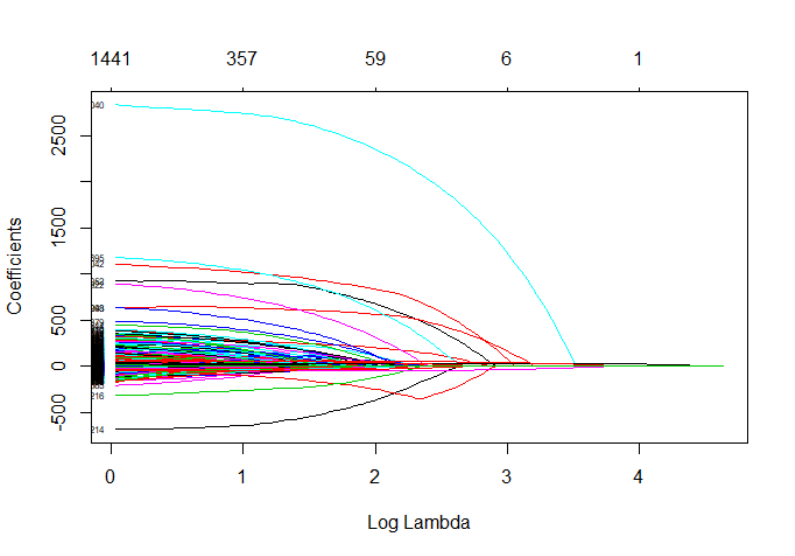

Figure 4.2: Number of features selected by the LASSO model as function of the Lambda

parameter ........................................................................................................................... 83

Figure 4.3: Cross-validated mean estimates of the MSE as a function of the Lambda

parameter ........................................................................................................................... 84

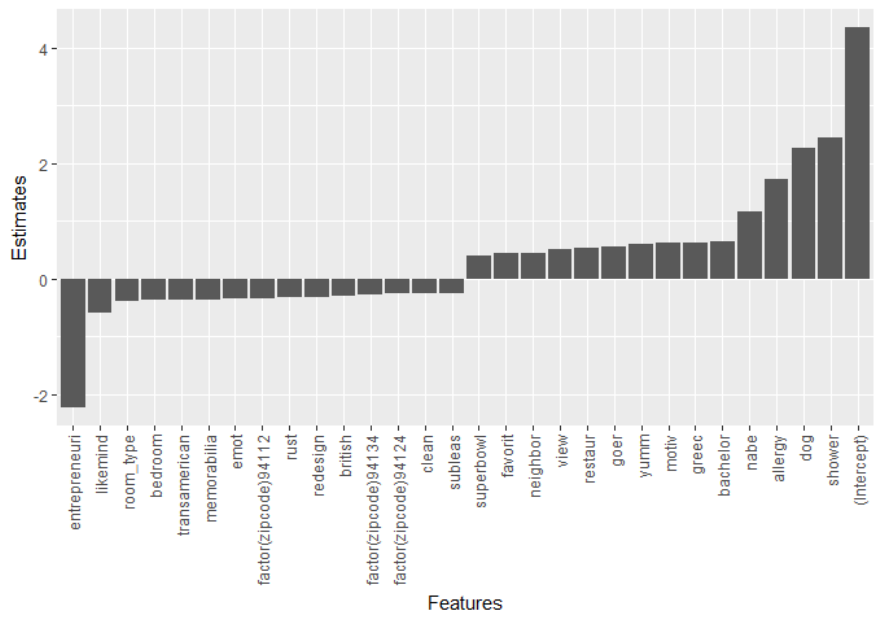

Figure 4.4: Estimates of the most important significant positive and negative variables . 85

1

CHAPTER 1 : GENERAL INTRODUCTION

Other the last two decades, the web 2.0 has reshaped the structures and conditions

of diverse markets such as transportation, travel, books, banking, energy, and healthcare.

Contrary to the first generation of static web pages on the internet, the web 2.0 refers to

dynamic pages such as social media or online platforms where the users can interact.

Online platforms such as Amazon, Netflix, eBay, Alibaba, Uber, LinkedIn, Zipcar, and

Airbnb are now household names. They create value by facilitating the transaction of

products and services between two or more economic agents who would otherwise have

difficulty finding each other (Evans et al. 2011). Online platforms offer the possibilities

for different agents to interact and record the nature and content of the billions of interac-

tions and transactions that occur on the platforms. They are disrupting major industries,

and the volume of transactions on these platforms is consistently increasing. According to

the U.S. Census Bureau (2017), online sales accounted for 8.2 percent of total sales in the

second quarter of 2017 and rose by 16.3 percent compared to the second quarter of 2016;

total retail sales increased by only 4.4 percent during the same period. As the volume of

transactions for online platforms increases, so do the number of people using these plat-

forms and the size of data generated by the platforms. However, applied research on plat-

forms in the economics literature has not followed the same growth.

The development of online platforms has made available a considerable volume of data.

Social networks, geo-location, impressions through tweets, online purchases, and mobile

phone data, are a few examples of data sources that can allow novel research in social

science. For economists, the availability of this amount of data is an opportunity to ob-

2

serve consumers’ revealed preferences through their behavior online. These data can also

address some econometrics issues (e.g., omitted variable bias and instrumental variables)

faced when estimating causal relationships in non-experimental designs. The availability

of these big data also raises many new challenges related to the techniques needed to col-

lect, manage, and derive knowledge from them. These challenges can be overcome by

borrowing the techniques and skills needed to deal with big data from other disciplines

such as statistics and computer science. Machine learning combines computer science

and statistics to handle and derive relevant information from big data, and the present dis-

sertation offers three essays on the application of machine learning methods in econom-

ics.

The first essay examines the relationship between guests reviews, used as a proxy for

quality, and the price set by hosts on the Airbnb platform in Boston. Using sentiment

analysis to derive the quality from the reviews and a hedonic spatial autoregressive model

applied to rental room prices on Airbnb, the finding of this essay suggest that prices are

strategic complements and are influenced by the review score, the characteristics of the

room, and the features of the neighborhood. The marketing implication is that consumers

respond to the contents of online reviews, in addition to customer ratings. The results of

this essay show that policies that improve the quality of the room for one host will have a

spillover effect on the price of rooms offered by other hosts.

The second essay uses text categorization and random forest to predict beer preferences.

It compares six text categorization procedures: frequency terms of unigrams, bigrams,

trigrams, and their inverse frequency terms. With data scrapped from BeerAdvocate, an

online network of independent consumers and professionals in the beer industry, this es-

3

say shows that the words used in the reviews can predict consumer’s preferences. Moreo-

ver, it indicates that the use of less frequent terms in the predictive models outperforms

the use of more frequent terms, confirming Sparck Jones (1972)’s heuristics results.

However, low-level combinations of the words in the reviews better predict consumers’

preferences compared to high-level combinations, even though the latter better represent

the complexity of human languages.

The third essay addresses the omitted variable bias problem in hedonic pricing models

using unigram text categorization. The presence of omitted variables is a source of bias

for the estimates of hedonic models. The solutions adopted in the real estate literature

have struggled to deal effectively with this issue. This essay uses textual analysis to ad-

dress the omitted variable bias problem. It explores a method of proxying the variables

omitted in the hedonic regression models with the words used in the description of the

rental units. The results show that this solution reduces the pricing error in the hedonic

models and can be useful in accounting for omitted quality measures in hedonic price

models.

4

CHAPTER 2 : IMPACT OF REVIEWS ON PRICE: EVIDENCE FROM

SENTIMENT ANALYSIS OF AIRBNB REVIEWS IN BOSTON

2.1. Abstract

There is a growing interest in deriving value from user-generated comments and reviews

online. For businesses and consumers using online platforms, the reviews serve as quality

metrics and influence consumers purchasing decision. This study examines the

relationship between guests reviews, used as a proxy for quality, and the price set by

hosts on the Airbnb platform in Boston. Using sentiment analysis to derive the quality

from the reviews and a hedonic spatial autoregressive model applied to rental room prices

on Airbnb, we find that prices are strategic complements and are influenced by the

review score, the characteristics of the room, and the features of the neighborhood. The

marketing implication is that consumers respond to the contents of online reviews, in

addition to customer ratings. Policies that improve the quality of the room for one host

will have a spillover effect on the price of rooms offered by other hosts.

2.2. Introduction

Many platforms allow customers to write a review for the sellers they use or for

the products (or services) they purchase (e.g., eBay, Amazon, Priceline). This allows po-

tential consumers to go through multiple reviews about products or services before mak-

ing their purchasing decisions. They use the opinions in the reviews to form their own

opinion about the quality of the product or service they want to purchase. Reviews are

becoming even more important for experience goods such as hotel rooms and rental

houses, which are purchased at distance (Viglia, et al., 2016) with the quality being hard

for travelers to assess before consumption (Klein, 1998).

5

Researchers have shown increasing interests in understanding the opinions and

feelings hidden in the millions of reviews left by consumers online (Liu, et al., 2005,

Pang and Lee, 2008). Reviews, scored or rated in terms of satisfaction by customers, in-

fluence purchase probability of online shoppers (Kim and Srivastava, 2007). Different

schemes of rating are used on online platforms. They commonly vary from bimodal,

thumbs up or thumbs down, to scale from one to five stars (Sarvabhotla, et al., 2010).

According to Archak, et al. (2011), numerical or bimodal ratings do not accurately cap-

ture the information embedded in the reviews and may not express precise details to pro-

spective shoppers. Using predictive modeling, they show the effect of different product

features in the reviews on sales, confirming the importance of the words used in the

reviews to evaluate the products. Chevalier and Mayzlin (2006) show that customers rely

more on the reviews than the rating scores.

In the hotel industry literature, the presence of consumer reviews and ratings are

found to drive sales (Blal and Sturman, 2014, Floyd, et al., 2014, Ye, et al., 2009). Most

of the studies use star ratings and customer ratings as a proxy for the quality in the re-

views but not the words in the reviews. Yet, there is no agreement on i) the relationship

between hotel reviews and quality, and ii) the impact of reviews on price. For examples,

Öğüt and Onur Taş (2012), using star ratings and customer ratings, find that these quality

metrics increase hotels price and online sales. Contrary, a recent study by Viglia, et al.

(2016), finds a positive association between review scores and hotel occupancy rates, but

not a significant relationship between reviews and star ratings, suggesting that these two

measures involve two different concepts of quality, contrary to the existing literature on

reviews and quality.

6

The present study contributes to the online marketing literature on the relationship

between guests’ reviews and quality, and their impact on price. Unlike previous research

that uses review score such as the number of reviews, star rating and customer rating, this

study derives the constructs of quality from the opinions in the reviews with sentiment

analysis. Sentiment analysis is a methodology, often used in computer science, to extract

value, opinions or attitudes toward products or services from reviews (Bautin, et al.,

2008, Hu and Liu, 2004, Pang and Lee, 2008, Ye, et al., 2009). Using data collected from

Airbnb in Boston and a spatial autoregressive hedonic model, the analysis shows that the

price of a room on the platform depends not only on the intrinsic characteristics of the

room and its location, but also on the price set by other hosts in the neighborhood. The

price of a room is also correlated with the quality score derived from sentiment analysis

of its reviews. The spatial nature of the estimation method implies that the quality

measure, derived from the reviews of a room, has not only a direct effect on the room

price but also a spillover effect on the price of rooms in its neighborhood.

The remainder of this paper is organized as follows. In section 2 we give an

overview of the relevant literature. Section 3 presents the conceptual framework. Section

4 introduces the data and the spatial autoregressive estimation method, including a

detailed description of the sentiment analysis methodology. Results of the spatial hedonic

pricing model are presented in section 5. Finally, section 6 concludes.

7

2.3. Literature review

The importance of word-of-mouth (WOM) on consumer purchasing decision has

been widely examined in the economic literature (Brooks, 1957, Kozinets, et al., 2010,

Liu, 2006). WOM contents are user-generated comments, reviews, ratings, and other

communications and are perceived to be more credible than advertising (Mauri and

Minazzi, 2013, Ogden, 2001) since they are real user experiences and not paid ads.

Litvin, et al. (2008) stresses the importance of the independence of the source of the mes-

sage for WOM to be considered as a reliable source of information by customers. This is

well illustrated by Mauri and Minazzi (2013) experimental study where hotel guests re-

views are positively correlated with customers’ hotel purchasing intention, but the pres-

ence of hotel managers’ responses to the guest's reviews leads to a negative correlation

with their purchasing intention. Zhang, et al. (2010) confirm this finding. Using data col-

lected from Dianping.com on restaurants, they compare the popularity of consumers’ re-

views with professional editors’ reviews. Their study shows that consumers-created re-

views are more popular than editors’ reviews, as indicated by the number of page views.

There is a substantial number of studies in economics on the effect of reviews on

sales. De Vany and Walls (1999), Dellarocas, et al. (2007) and Liu (2006) show the im-

pact of reviews on box office revenue. In the service industry, reviews are considered as a

primary source of information on quality (Hu, et al., 2008) as they reduce information

asymmetry, and allow consumers to have better information about the attributes of the

service they want to purchase (Nicolau and Sellers, 2010). Luca (2016), studying the im-

pact of reviews and reputation on restaurant revenue in Washington, finds that a one-star

increase in Yelp’s rating increases a restaurant’s revenue by 5-9 percent. Zhang, et al.

8

(2013), studying the determinants of camera sales, finds that the average online customer

review, as well as the number of reviews, are significant predictors of digital camera

sales.

In the hotel industry, reviews affect hotel room purchase intention, sales, and

price. According to O’Connor (2008), increasing numbers of travelers consult feedback

left by other customers while planning their trip. Gretzel and Yoo (2008) estimate that

75% of travelers use the feedback of other consumers whilst making travel arrangements.

Vermeulen and Seegers (2009), through an experimental study in the Netherlands, con-

firm that online reviews affect consumers’ choice in the hotel industry, but this effect is

asymmetric. Results from their study indicate that positive and negative reviews do not

have the same impact on a consumer’s behavior. Positive reviews have a positive impact,

but negative reviews have a smaller impact in absolute value than positive reviews. With

regard to sales and price, Ye, et al. (2011), exploiting data from a major travel agency in

China, show that a 10 percent increase in traveler rating increases the volume of online

reservations by more than 5 percent. Öğüt and Onur Taş (2012) also find that more posi-

tive online customer ratings increase hotel room prices and online sales.

Quality has many dimensions and measures and customer ratings might only cap-

ture a small part of it. During the rating process, customers may refer not only to the

quality of the product or service but also to its price, or both. Even when referring to the

quality, some features of the product or service are considered more important than oth-

ers, depending on the taste of the customer. Zhang, et al. (2011) show a heterogeneous

impact of rating on hotel room prices. They found the impact to be only noticeable for

economy and midscale hotels and not for luxury hotels where location and the quality of

9

services are the most important factors that determine consumers’ willingness to pay.

They use different ratings such as cleanliness, quality of room, location, and service and

found different impacts of these ratings on price. The findings of Li and Hitt (2010) con-

firms the results of Zhang, et al. (2011). According to Li and Hitt (2010) both quality and

price influence purchase decision. Their empirical analysis on digital cameras shows that

ratings, being in general unidimensional, are biased by prices and are more closely corre-

lated with the product value than its quality. More recently, Viglia, et al. (2016) find a

positive association between review score and hotel occupancy rate. They use diverse

categories of hotels and various online review platforms and find that a one point increase

in the review score increases the hotel occupancy rate by 7.5 percentage points. However,

they did not find any association between review score and star rating. For Viglia, et al.

(2016) review score and star rating might reflect different measures of quality.

There is a need to clarify the relationship between reviews and price. Most of the

studies on the impact of reviews on price and sales in the hotel industry literature use

rating or single review scores that might not represent the complexity of the customer

opinion or sentiment about a good or service accurately. Allowing for a methodology,

such as sentiment analysis, that mines the client's opinion in the reviews is more likely to

depict correctly the quality of the good or service he/she receives. Using sentiment

analysis, this study examines the role of opinions derived from reviews in consumer

valuation and prices. It uses data collected in the short-term apartment rental market on

Airbnb in Boston. The sentiment expressed by the reviews on the platform serves as an

intrinsic indicator of the quality of the service offered by the hosts. The indicator is then

used to empirically test if reviews affect price and if multidimensional ratings have

10

identical effects on price. Our contribution is twofold. First, we use sentiment analysis to

examine how the contents of online reviews could affect prices, rather than relying on

customer ratings. Second, with a unique dataset, we test whether rental rooms’ prices are

spatially correlated, and if so, whether rental prices are strategic complements or

substitutes.

2.4. Conceptual framework

An interesting feature of online platforms, such as Airbnb, is the possibility for

both hosts and guests to learn about each other before accepting the transaction. By facili-

tating direct interaction between participants on two sides, these platforms offer partici-

pants the possibility to control the terms of their interaction; the intermediary does not

take control of these terms (Hagiu and Wright, 2015). On the Airbnb platform, hosts de-

cide on the bundle of services they will offer (bed, couch or sofa, shared bed, Wi-Fi, etc.)

and the price of their service. Guests have the possibility to define the nature and quality

of the services they desire. For hosts, this has direct implications on their competitive-

ness. The quality of reviews left by guests can impact their business positively (if the

review is positive) or negatively (if the review is negative). Hosts can also learn from

their competitors and adjust their price and quality accordingly. This type of interaction

where participants on one side of the network compete is referred to as inside competition

or a same-side negative effect (Eisenmann, et al., 2006).

Unlike studies that rely on a platform economics framework to analyze same-side

network effects, this study uses the vertical product differentiation model to describe

competition in the quality and price space on the Airbnb platform. The product differenti-

ation literature has benefited from the early work of Hotelling (1929) who sets up the

11

foundation for product and price competition in oligopolistic industries. Hotelling (1929)

uses the model of a linear city to study horizontal product differentiation. In the model,

the location represents the different varieties of a product. Consumers incur a linear

transportation cost that increases with the distance that separates them from their ideal

product.

Two consumers who value the products differently will be at different locations,

but if the prices are identical, they will buy from the “closest” firm. A key contribution

of the Hotelling (1929) model is that a duopoly will locate at the center of the linear

market creating a minimum differentiation and offer similar products. In this setup, if

each duopolist sets the same price for their products, both of them will have positive de-

mand and the products are said to be horizontally differentiated.

The assumption of a linear transportation cost is revised by d'Aspremont, et al.

(1979). They considered a quadratic transportation cost function and their model yields,

at the equilibrium, a dispersion of firms instead of the Hotelling (1929) principle of min-

imum differentiation. The assumptions on the cost function have significant implications

on the final result of the model.

Building on the model of horizontal differentiation, many authors have considered

the case where even though the two products are offered at the same price, one captures

the whole demand because of its better quality. This case is referred to as vertical differ-

entiation and has been examined by Mussa and Rosen (1978), Gabszewicz and Thisse

(1979), Shaked and Sutton (1983), and Motta (1993). The conceptual framework used in

this study builds on the vertical product differentiation models of Wauthy (1996) and

12

Motta (1993). Although there are a number of hosts on Airbnb in a city, most of them

compete with a small number of competitor(s) within a range, e.g., one or two miles. We

therefore consider the following two-stage game based on duopolistic competition. Hosts

choose the quality of their room in the first stage, and in the second stage, they compete

for the price given these qualities. We suppose costs are fixed

(

)

=

and are in-

curred during the first stage of the game. At the second stage, as in Motta (1993), firms

incur a constant production cost. The cost for quality development in the first stage is

considered as a sunk cost in the second stage.

Guests have an identical indirect utility function with the following preferences:

=

0

(2.1)

where , is a taste parameter uniformly distributed with unit density. The

mass of guests is

= 1 0 = 1 and the cumulative distribution

(

)

=

is the

fraction of guests with a taste parameter lower than . Guests with higher taste parame-

ters are willing to rent (pay for) a room of higher quality.

the s term represents the quality and the higher the quality of the room, the higher

the utility reached by the guest. We have a high-quality host

and a low-quality one

with

>

and quality differential =

> 0 (2.2)

There is a lower bound to the level of quality since hosts need to meet a minimum

quality standard before posting their room on the platform. Using backward induction, we

will solve for the sub-game perfect Nash equilibrium.

13

A guest is indifferent between quality 1 and quality 2 if he has a taste parameter

that satisfies:

=

=

=

(2.3)

A guest is indifferent between renting on Airbnb and not renting at all if he has a

taste parameter that satisfies:

= 0 =

=

(2.4)

From (2.3) and (2.4) we derive that a guest with a taste parameter

rents the

apartment of quality 2 and the proportion of guests who rent the room of quality 2 is:

(

)

=

=

(2.5)

and guests who rent the room of quality 1 have a taste parameter

>

and their

proportion is:

(

)

=

=

(2.6)

We derive the demands for high and low qualities hosts:

(

,

)

=

(

,

)

=

(2.7)

In Nash equilibrium, firms choose their price to maximize their profit given by:

=

[

)

]

(2.8)

with the constant unit production cost. We can set the constant unit cost to 0 and

the first order condition gives

+

= 0 (2.9)

14

Solving for prices in the first order conditions and using results from equation

(2.7) give the following reaction functions:

=

=

=

=

(

+ )

(2.10)

From equation (2.10) we can derive the equilibrium prices set by the high and

low-quality hosts:

=

=

(2.11)

Motta (1993) shows that these are Nash equilibrium prices. We can also derive:

=

(

)

> 0 (2.12)

Equation (2.12) implies that, in equilibrium, high-quality hosts set higher prices

compared to low-quality hosts.

Substituting (2.12) into (2.7) gives the equilibrium demand:

=

=

(2.13)

Since we are interested in the effect of the rival’s price on the host price, we can

derive:

=

> 0

=

> 0

(2.14)

15

This predicts that prices are strategic complements. When a host increases its

price, its rival also increases his price. When the low-quality host price increases his

price, the response of the high-quality host is stronger than the reaction of the low-quality

host following an increase in price by the high-quality host:

=

<

=

(2.15)

2.5. Data and Methods

2.5.1. Data

The data used in this study are from the Airbnb platform for Boston and were

retrieved from Inside Airbnb

1

during the month of September 2016. Airbnb is a short-

term rental platform that offers lodging to travelers. It connects individuals who want to

rent their apartment to temporary visitors. Airbnb charges both the host and the guest a

service fee by facilitating the transaction between the two parties.

We have data for 2,051 individual hosts on Airbnb in our sample, which is

concatenated with data from other sources. The Airbnb data contains the characteristics

of the apartment offered, its geographic coordinates, the price per night, and the reviews

by previous guests. Using sentiment analysis, the opinions in the reviews are mined, and

1

Inside Airbnb is an independent, non-commercial set of tools that collects and facilitates the access to

publicly available information about a city's Airbnb listings.

16

a score is derived. The mean score of the reviews for each room is used as a proxy for the

quality of the room

2

.

The Airbnb data is combined with economic data for the Boston area derived

from the American Community Survey at the tract level. Shapefiles of the parks, trans-

portation system and central business district are joined to the Airbnb data set using

ArcGIS. Table 2.1 presents a detailed description and the summary statistics of the varia-

bles utilized in this study. We include several key characteristics of an apartment (that is

price, number of persons a room can accommodate, number of bathrooms and bedrooms

in the apartment, score derived from a sentiment analysis of reviews, and the number of

reviews the room received) and some neighborhood variables including the distances to

the nearest convention center and train station and measures of income and education

level.

2

Details on the opinion mining using sentiment analysis are presented in the next section.

17

Table 2.1: Description and summary statistics of the variables

Variable Description Size Mean Std Dev Minimum Maximum

Structural Variables

Price

Apartment rental price (dependent variable) 2,051 165.19 114.49 20 1300

Accommodate

Number of persons the room can accommodate 2,051 3.11 1.86 1.00 16.00

Bathroom

Number of bathrooms in the apartment 2,051 1.18 0.49 0.00 6.00

Bedrooms

Number of bedrooms in the apartment 2,051 1.26 0.79 0.00 5.00

Review score

The score derived from sentiment analysis of the

reviews

2,051 10.81 4.75 -8 47

Number of reviews

Number of reviews per rooms rented on Airbnb 2,051 10.96 12.74 1 82

Neighborhood variables

Convention

Euclidian distance (in feet) to the closest conven-

tion center

2,051 8,247.94 7,716.91 73.97 41,733.11

MBTA

Euclidian distance (in feet) to the closest train sta-

tion

2,051 1,782.66 2,128.51 35.07 17,950.10

Income

Per capita income at the closest census tract 2,051 51,282.59 29,310.81 7,011.00 120,813.00

Graduate

Percentage of the tract median family with at least

a bachelor’s degree

2,051 60.43 23.98 5.40 88.90

2.5.2. Derivation of quality scores with sentiment analysis of the reviews

Natural language processing and linguistic techniques provide the foundation for

sentiment analysis, which has been used in recent years to derive opinions from texts (Hu

and Liu, 2004, Popescu and Etzioni, 2007, Ye, et al., 2009). This approach is used here to

mine the opinions in the reviews left by guests on Airbnb and derive a quality score from

those reviews. AFINN’s general purpose lexicon helped extract the sentiments from the

words used by the reviewers. AFINN was developed by Nielsen (2011) and is a lexicon

based on unigrams (single words). The lexicon contains English words where each uni-

gram is assigned a score that varies between minus five (-5) and plus five (+5). The nega-

tive scores indicate negative sentiments and positive scores indicate positive sentiments.

The newest version of the lexicon, AFINN-111, which contains 2,477 words and phrases,

is used. To perform the analysis on sentiment, the words used in each review are assigned

an opinion score, and the total score of a review is given by the sum of the scores of the

words in that review. Specifically, the following procedure is followed:

- The reviews are cleaned of punctuation, numbers, extra spaces and non-textual

contents.

- Irrelevant words are removed using “stopwords” with English as the language of

reference. Stopwords are words such as “I,” “the,” “a,” “and” that do not add

value to a review.

- Each word is replaced by its stem (the root of the word).

- Each stem is then matched with a word or unigram in the list of sentiment words

in the AFINN lexicon. If a match is found in the lexicon, the stem is attributed the

score of the match.

19

- The final score of a review is the sum of the sum of the scores of positive and

negative matches.

Table 2.2 presents a sample of the reviews and the scores associated with them.

Airbnb estimates that 70% of the guests provide a review on their experience. Only the

reviews written in 2016 were used for our analysis since customers on online platforms

focus on more recent comments (Pavlou and Dimoka, 2006). Our algorithm is built to

detect sentiment in reviews written in English, we use Cavnar and Trenkle (1994) N-

gram-based approach for text categorization to retrieve the reviews written in English.

The N-gram-based approach has been shown to achieve a 99.8% correct classification

rate when used to classify articles written in different languages on the Usernet news-

group (Cavnar and Trenkle, 1994). We use the texcat package (Feinerer, et al., 2013) for

the review categorization. This package replicates and reduces redundancy in the Cavnar

and Trenkle (1994) approach. Figure 2.1 presents the frequency of the languages that ap-

peared in the reviews; notice that almost all the reviews are written in English. On

Airbnb, an automatic review is generated when hosts cancel the booking prior to arrival.

Those reviews are dropped from the dataset. In total, 22,651 reviews were mined and the

average of the review score per room is used as a proxy for the room quality.

Table 2.2: Sample of reviews and their score

Reviews

3

Score

Check-in/check-out was easy and it waa easy to get to the house from the metro station which took me only 5 mins or

even less. The house was clean but only problem was that there was only one bathroom but other than, the house is a

perfect place to stay.

5

We stayed at Alex place for 2 nights and are totally happy that we have chosen it. The bed was comfy, the room was

very nice and the host and her husband are super friendly.

11

This place was a great little place to stay and call you own for how ever long you need. only a few minute walk to the

Boston Commons and public transportation. A lot of great little shops just around the corner. I highly recommend this

place if you just need a little get away for a few days!!! Thanks again Paige

10

The apartment was perfect for our family. Check in and check out was easy, the apartment was clean and quiet, decent

sized kitchen. Location is awesome. We had a great time.

14

3

The reviews are presented as written on Airbnb; we did not correct the typos.

21

Figure 2.1: Frequency of the languages used to write the reviews on Airbnb in Boston

2.5.3. Empirical estimation procedure: The Spatial Autoregressive Model

The Moran’s I statistic and the Lagrange multiplier are used to test for the pres-

ence of spatial effects in the price data. Results of the tests in tables 2.3 and 2.4 indicate

the presence of spatial dependence through the spatial lagged price.

croatian-ascii

latvian

russian-iso8859_5

serbian-ascii

turkish

finnish

indonesian

nepali

slovak-windows1250

basque

breton

hungarian

slovenian-iso8859_2

tagalog

bosnian

esperanto

estonian

irish

slovenian-ascii

albanian

rumantsch

slovak-ascii

polish

icelandic

frisian

manx

latin

danish

czech-iso8859_2

welsh

romanian

scots_gaelic

dutch

norwegian

catalan

swedish

afrikaans

middle_frisian

italian

portuguese

german

spanish

french

scots

english

0 10000 20000

Freq

language

22

Table 2.3: Moran I test

Weights matrix threshold

Moran I

p-value

1 mile

0.19

0.000

3 miles

0.07

0.000

5 miles

0.007

0.000

Table 2.4: OLS regression diagnostic test for spatial dependence

Test

Value and significance per weigh matrix

1 mile

3 miles

5 miles

Spatial autocorrelation (error)

0.009***

-0.0009***

0.0004***

Lagrange Multiplier (SARMA)

67.53***

5.98*

3.58

Lagrange Multiplier (error)

10.28***

0.74

0.36

Lagrange Multiplier (lag)

66.76***

4.70**

3.49*

Robust LM (error)

0.77**

1.27

0.09

Robust LM (lag)

57.25***

5.23**

3.22**

Note: * denotes that the estimates are significant at 10% and ** and *** denote that they are significant at 5% and 1%

level.

Ordinary Least Squares (OLS) is known to produce biased, non-consistent and in-

efficient estimates in the presence of spatial association in the form of spatial dependence

or spatial autocorrelation (Anselin, 1988, Anselin and Bera, 1998), so a spatial hedonic

price model is used for estimation. The spatial autoregressive model (SAR) accounts for

the presence of a spatial lag dependent variable. The model is specified as follows:

= + + (2.16)

where the dependent variable P is the n by 1 vector of the renting prices. The Box-Cox

transformation suggests a log transformation of the price variable as the functional form

that best fits the data. W is an n by n spatial distance matrix. We use 1 mile as the dis-

23

tance threshold. X is an n by k matrix of exogenous explanatory variables with a constant

term vector. It includes the structural characteristics of the apartment such as the number

of bathrooms, the number of people it can accommodate, the type of room, the

cancellation policy, the number of reviews, and the quality of the apartment (sentiment

score). It also includes neighborhood characteristics such as the distance to the nearest

convention center, distance to the nearest bus or train stop, the area’s unemployment rate,

and level of education.

the term is a k by 1 vector of coefficients of the explanatory variables; is the

independent error term which follows a normal distribution with zero mean (0

) and a

constant variance (

); is the price spatial lag (WP) coefficient. Mobley, et al. (2009)

and Mobley (2003) show that the coefficient on the spatial lag price variable identifies

strategic response of hosts to price changes. Price complementarity corresponds to a posi-

tive spatial lag coefficient while substitutability corresponds to a negative spatial lag

coefficient. If the prices are strategic complements, the expectation is that the sign of is

positive.

According to Anselin (1988), estimating equation (2.16) with maximum likeli-

hood will produce consistent and efficient estimates. Contrary to the OLS model, the co-

efficients on the regressors in equation (2.16) are not the marginal impacts of a one unit

increase in their value on the dependent variable (Gravelle, et al., 2014, Le Gallo, et al.,

2003, Lesage, 2008). The reduced form of the equation (2.16) gives the intuition behind

this result:

(

)

= + (2.17)

24

Which can be rearranged as

=

(

)

+

(

)

(2.18)

This is useful in examining the partial derivative of

with respect to change in

the ,

th

variable

:

=

(

)

(

)

(2.19)

The partial derivative here is different from the usual OLS scalar derivative ex-

pression

. Instead, the partial derivative is an n-by-n matrix. The partial derivative on

off-diagonal elements ( ) are different from zero (which would be the case with

OLS). This shows that changes in the explanatory variable of any host on Airbnb can af-

fect the price of all the hosts on the platform. The own partial derivative is referred to as

the direct effect and is captured by the diagonal element of

(

)

(

)

. The

indirect or spillover effect corresponds to the off-diagonal elements of the matrix (when

). Averaged over all observations, these measures give the average direct effect, the

average indirect effect and the average total effect (Lesage, 2008). Changes in the quality

variable are used to illustrate each of these effects. If a host improves the quality of his

room, the average direct effect measures the average impact on price for host (averaged

other all observations). The impact of the change in room quality by all the other hosts on

host ’s price (averaged over all observations) is given by the average indirect effect. Fi-

nally, the total average effect measures the impact on price of changes in all hosts quality.

It is equal to the average direct effect plus average indirect effect.

25

2.6. Econometric results and discussion

Four models were estimated: Model I uses Ordinary Least Squared (OLS); Model

II uses the Spatial Autoregressive (SAR) model with 1 mile as the distance threshold

weight matrix and the spatial lag as the only explanatory variable; Model III is also a

SAR, but with the quality variable added to the spatial lag; and finally, model IV uses all

the explanatory variables. We use the package spdep (Bivand, et al., 2013, Bivand and

Piras, 2015) in R (R Core Team, 2017) for estimations. We also conduct a series of sensi-

tivity tests. First, we perform a linear mixed effects analysis by including a random effect

at the census tract level. Second, we vary the spatial weight matrix by increasing it to 3

and 5 miles. Third, we use a unidimensional measure of quality and six disaggregated

alternative measure of quality.

Results of the OLS regression and maximum likelihood estimation of the Spatial

Autoregressive (SAR) models are presented in Table 2.5. The sign and significance level

of the estimates are consistent across the four models. The AIC is lower in the SAR mod-

els compared to the OLS model, indicating a better fit. The Lagrange Multiplier test on

spatial error dependence in the SAR models does not reveal a spatial dependence in the

residual errors and we use robust standard errors for our estimates.

Results of the theoretical model predict that hosts will compete for prices in the

short-term rental market; prices are expected to be strategic complements. The spatial

autoregressive coefficient is positive and highly significant (e.g., a parameter of 0.33 in

the last SAR specification). This indicates that room prices are strategic complements on

Airbnb in Boston. A price increase by one host leads to a price increase by its neighbors.

26

Table 2.5: Estimates of the spatial lag regressions with 1 mile as weight matrix

Variables

Dependent variable: lnPrice

OLS

SAR

I

II

III

IV

W_LnPrice

0.92***

(0.02)

0.92***

(0.02)

0.33***

(0.08)

Intercept

4.59***

(0.19)

0.35***

(0.11)

0.21***

(0.02)

2.60***

(0.19)

Accommodate

0.12***

(0.01)

0.12***

(0.01)

Accommodate^2

-0.006***

(0.001)

-0.006***

(0.001)

Bathroom

0.08***

(0.01)

0.08***

(0.01)

Bedroom

0.17***

(0.01)

0.17***

(0.01)

Review score

0.01***

(0.001)

0.013***

(0.002)

0.01***

(0.001)

Number of reviews

-0.002***

(0.0005)

-0.002***

(0.0005)

Room_type

Private Room

-0.43***

(0.01)

-0.41***

(0.02)

Shared Room

-0.68***

(0.04)

-0.68***

(0.04)

Cancellation Poli-

cy

Moderate

0.05***

(0.02)

0.06**

(0.06)

Strict

0.02

(0.01)

0.03

(0.01)

Super-strict

0.25***

(0.06)

0.27***

(0.03)

Log Distance

Convention

-0.15***

(0.008)

-0.07***

(0.008)

MBTA

-0.003

(0.009)

-0.005

(0.009)

Log Education

0.09***

(0.01)

0.06**

(0.02)

Log Income

0.06***

(0.01)

0.05**

(0.02)

AIC

1158

3097.2

3064.3

1104.2

LM test for residual autocorrelation

0.77***

0.21

0.44

0.13

Note: * denotes that the estimates are significant at 10% and ** and *** denote that they are significant at 5% and 1%

level. Robust standard errors are in parenthesis

27

The SAR estimates are not the partial derivatives as shown by equation (2.19);

Table 2.6 decomposes the total effect for variables into its direct and indirect compo-

nents.

Table 2.6: Direct, indirect and total effects of the impact of the regressors on room price

Variables

Impacts

Direct

Indirect

Total

Accommodate

0.126***

0.061***

0.188***

Accommodate^2

-0.006***

-0.003***

-0.009***

Bathroom

0.082***

0.040***

0.122***

Bedroom

0.170***

0.083***

0.253***

Review score

0.010***

0.004***

0.015***

Number of reviews

-0.002***

-0.001***

-0.003***

Room_type

Private Room

-0.420***

-0.205***

-0.626***

Shared Room

-0.682***

-0.334***

-1.016***

Cancellation Policy

Moderate

0.063***

0.031***

0.094***

Strict

0.031

0.015

0.046

Super-strict

0.273***

0.134***

0.407***

Log Distance

Convention

-0.076***

-0.037***

-0.114***

MBTA

-0.005

-0.002

-0.008

Education

0.063***

0.031***

0.094***

Income

0.052***

0.025***

0.078***

Note: * denotes that the estimates are significant at 10% and ** and *** denote that they are significant at 5% and 1%

level.

The results of the estimation show that the coefficients of the structural variables

such as the number of persons the room can accommodate, the number of bathrooms, and

the number of bedrooms are positive and statistically significant. Listings with more bed-

rooms, more bathrooms and that can accommodate more persons tend to set higher pric-

28

es. This is consistent with the previous literature on the hotel industry (Cirer Costa, 2013,

de Oliveira Santos, 2016, Espinet, et al., 2003). When a quadratic term for the number of

persons a room can accommodate is included, this variable exhibits a diminishing mar-

ginal effect on price. Changes that increase the number of persons a room can accommo-

date has a larger impact on price for hosts whose rooms accommodate fewer persons than

for hosts whose room accommodate larger number of guests up to the turning point of 10

(0.188/(-2*-0.009)) persons. The number of bedrooms in the apartment has a larger im-

pact on price (25.3) than the number of bathrooms (12.2 percent).

The theoretical model predicts that hosts with high-quality rooms will set a higher

price compared to hosts with low-quality rooms. The coefficient on the review score var-

iable allows us to test if price is affected by room quality. As in the hotel marketing lit-

erature, our estimation result confirms expectation. Review score has a highly significant,

positive and similar coefficient across all the regression models (a parameter of 0.01 in

all specifications in table 5), implying that quality impacts room price. Based on table 6,

the result suggests that a one point increase in review score will increase room price by

1.5 percent. A third of this impact on price comes from the indirect impact from hosts

located nearby (as they increase their prices in response). This confirms the existence of

a spillover effect. The number of reviews also is relevant in explaining price. Airbnb es-

timates that 70% of guests provide a review on their host. The number of reviews is used

to approximate the demand for rooms. The negative sign for the coefficients largely

reflects the law of demand; the demand for higher price rooms is smaller.

Estimates of the impact of the room type on price show that shared rooms and

private rooms, compared to entire homes, are cheaper. A shared room is the cheapest

29

among all three. The coefficients for the dummy variables associated with these variables

are significant and negative. Shared rooms are 68.2 percent cheaper than entire homes

while private room are only 42.0 percent cheaper.

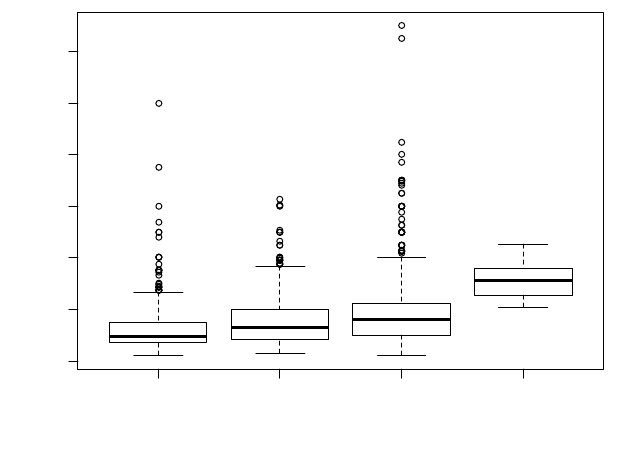

The coefficients on the dummies for cancellation policies show that, compared to

a flexible cancellation policy, hosts who use moderate, strict and super-strict cancellation

policies set higher prices. The cancellation policy can be seen as a segment differentiation

strategy by hosts. As figure 2 shows, average price increases with stricter cancellation

policies.

Figure 2.2: Boxplot of price by cancellation policy

To test if the impact of review varies by lodging segment, the SAR model was run

for each segment. Results in table 2.7 indicate that, except for moderate cancellation poli-

cy, the impact of quality on room price decreases as we move from flexible to super-strict

cancellation policy. The impact of quality on price for super-strict cancellation policy

flexible moderate strict super_strict_30

0 200 400 600 800 1000 1200

Cancellation Policy

Price

30

segment is not significant at 5% confidence level. Zhang, et al. (2011) found similar re-

sults when studying the determinants of hotel room prices. When considering lodging

segments, they found a positive impact of quality on room price for economy and mid-

scale hotels. However, for luxury hotels, quality does not affect room price. For the high-

er lodging segment, quality is no longer a differentiation factor. In Boston, all the hosts

who use a super-strict cancellation policy offer an entire home or apartment for rent on

the Airbnb platform. For these hosts, the quality of their room is already embedded in the

type of room they offer.

Table 2.7: Decomposition of the impact of review score for flexible, moderate, strict and

super strict cancellation policies

Segments

Impacts of review score

Direct

Indirect

Total

Flexible

0.015***

0.001***

0.016***

Moderate

0.003***

0.001***

0.005***

Strict

0.010***

0.001***

0.012***

Super strict

0.007*

0.000*

0.007*

Note: * denotes that the estimates are significant at 10% and ** and *** denote that they are significant at 5% and 1%

level.

Proximity to amenities has been shown to affect the price in hedonic price models

in previous studies. Our results indicate that only the distance to the nearest convention

center has the sign and significance level as expected. Participation in conferences for a

short-term period is among the reasons guests book rooms on Airbnb. The results of our

estimation support why hosts that are located closer to convention centers set higher pric-

es compared to hosts that are located further away from them. A one percent decrease in

the distance that separates a room from the nearest convention center leads to a 0.11 per-

31

cent increase in price. The distance to the closest train station does not affect price, as ev-

idenced by the non-significance of its coefficient.

Among the socioeconomic variables, the coefficients for education and income

per capita are positive and significant. We attribute the result to the theory of demand for

housing (Green and Hendershott, 1996). Neighborhoods with higher education and

income levels are more desirable, increasing the demand for houses in those

neighborhoods. High demand leads to high rental prices and consequently high prices for

the rooms rented on Airbnb. A one percent increase in the percentage of families with at

least a bachelor degree in the census tract where the room is located leads to a 0.09

percent increase in the room price. A similar change in income leads to a 0.07 percent

increase in price.

2.7. Sensitivity analysis

A series of alternative specifications are estimated for robustness checks. The es-

timation procedure is replicated with a linear mixed effects model. The same controls are

used as fixed effects variables. A random effect at the census tract level is added to char-

acterize idiosyncratic variation that is due to census tract differences. The census tract

might be a source of non-independence that needs to be considered within the model. We

test for the significance of the spatial lag price and review score variables using likeli-

hood ratio tests. P-values are obtained, and a likelihood ratio test is performed on the full

model with respect to the spatial lag price and with respect to the review score against the

model without these variables. The lme4 package (Bates, et al., 2015) is used in the es-

timation of the linear mixed model estimation. Table 2.8 presents the log-likelihood ratio

test results.

32

Table 2.8: Likelihood ratio tests for the statistical significance of price lag and quality

variables in the linear mixed effects models

Estimates

AIC

BIC

LogLik

Deviance

Chi-

square

Test for lag

price

Model without price lag

1069

1170

-516

1033

Model with price lag

0.25

1039

1146

-500

1001

31.82***

Test for quali-

ty

Model without quality

1083

1184

-523

1047

Model with quality

0.007

1039

1146

-500

1001

45.92***

Note: * denotes that the estimates are significant at 10% and ** and *** denote that they are significant at 5% and 1%

level.

The results of the linear mixed effects models confirm the spatial autoregressive

model results. The spatial lag price affects price (χ2 (1) = 31.82, p=0.000), increasing it

by about 0.33 ± (0.05). The review score also affects room price (χ2 (1) = 45.92,

p=0.000) increasing it by 0.01 ± (0.001). The coefficients for both review score and lag

prices are consistent with our assumption. Prices are strategic complements, and hosts

with rooms of high-quality set higher prices compared to hosts of low-quality rooms.

The full SAR specification regression is also estimated with 3 and 5 miles as

weight matrices. Increasing the threshold of the weight matrix allows the hosts to have a

larger number of competitors. The results of the estimates are presented in table 2.9. The

sign of the estimates for both the review score and the spatial lag price is consistent with

the results obtained using 1 mile as a weight matrix. The size of the spatial lag coefficient

estimates in the 3 and 5 miles weight model are lower than its size in the 1-mile weight

model, indicating, not surprisingly, that competition between hosts decreases as we in-

crease the distance between them. Hosts located further away from each other compete

less.

33

Finally, for a sensitivity check, other measures of quality are considered. First, we

use a unidimensional measure of quality that measures the overall satisfaction of the

guests. The unidimensional measure is similar to the single rating score commonly used

on many online platforms. Second, we consider six disaggregated measures of quality,

which are ratings by guests of specific aspects of the services provided by their hosts.

These measures are accuracy, cleanliness, check-in, communication, location and the

value of the apartment. The quality measure related to the accuracy of the listing reflects

how accurate the description of the apartment on the Airbnb platform is compared to the

guest’s experience. The quality rating cleanliness evaluates the cleanliness of the property

including the rooms, bathrooms and common areas. The quality of the check-in relates to

how welcome the guest felt when he/she first arrived.

Communications with the hosts as a quality measure provides an evaluation of

how long it takes the host to respond and the accuracy and usefulness of the host’s re-

sponses. A quality variable for the satisfaction of the guest about the location of the

apartment in the neighborhood and its proximity to amenities is also considered. The last

quality measure used for sensitivity check is related to the value of the listing, which

evaluates the guest satisfaction with paying the room rate for the service received.

34

Table 2.9: Estimates of the spatial lag regression with 3 and 5 miles as weight matrix

Variables

SAR

3 miles

5 miles

W_LnPrice

0.09**

(0.05)

0.14**

(0.05)

Intercept

3.86***

(0.56)

3.86***

(0.56)

Accommodate

0.12***

(0.01)

0.12***

(0.01)

Accommodate^2

-0.006***

(0.00)

-0.006***

(0.00)

Bathroom

0.08***

(0.01)

0.08***

(0.01)

Bedroom

0.17***

(0.01)

0.17***

(0.01)

Review score

0.01***

(0.00)

0.01***

(0.00)

Number of reviews

-0.002***

(0.00)

-0.002***

(0.00)

Room_type

Private Room

-0.43***

(0.01)

-0.43***

(0.01)

Shared Room

-0.68***

(0.05)

-0.68***

(0.05)

Cancellation Policy

Moderate

0.06***

(0.02)

0.05***

(0.02)

Strict

0.03

(0.01)

0.02

(0.01)

Super-strict

0.26***

(0.06)

0.25***

(0.06)

Log Distance

Convention

-0.14***

(0.00)

-0.15***

(0.00)

MBTA

-0.002

(0.01)

0.001

(0.01)

Education

0.09***

(0.01)

0.09***

(0.01)

Income

0.06***

(0.01)

0.06***

(0.01)

AIC

1121

1123

LM test for residual autocorrelation

7.26***

0.15

Note: * denotes that the estimates are significant at 10% and ** and *** denote that they are significant at 5% and 1%

level. Robust standard errors are in parenthesis

35

Results of the coefficients of these variables in the OLS and SAR regressions

(specified as in model IV with all the explanatory variables) are presented in table 2.10.

The results of the regressions of price on each of the different measures of quality show

consistent, positive and significant coefficients providing substantial evidence to support

the theoretical hypothesis that quality affects the price. The average impact of each of the

quality variables on the price was decomposed into its direct, indirect and total effect.

The results presented in table 2.11 show that for all the quality variables the average di-

rect effect on room price is higher than the indirect effect. Policies that provide an incen-

tive for hosts to improve the quality of their room have a direct positive impact on the

price of their room on Airbnb but also an indirect positive impact on the other hosts in

their neighborhood.

The size of the unidimensional measure of quality is significantly lower compared

to the other measures. Among the disaggregated measures of quality, cleanliness has the

highest impact on price (6.8 percent) followed by accuracy (4.9 percent). Value has the

lowest impact (3.2 percent). The impact of the unidimensional measure of quality on

price is less than one-third of the impact of value, the lowest disaggregated measure of

quality. This confirms Li and Hitt (2010) results where the unidimensional measure of